videostil: Bringing Video Understanding to Every LLM

Turn videos into structured LLM-ready data with configurable frame extraction, smart deduplication, and visual debugging—all open source.

Large Language Models have revolutionized how we process text, images, and even code. But when it comes to video? That's where things get complicated.

We hit this wall hard at Empirical. We help engineering teams ship faster with AI agents that write, maintain, and run end-to-end tests using Playwright. Our coverage agents ingest video recordings of user scenarios that need to be converted into automated tests. When tests fail, our maintenance agents analyze Playwright video recordings to self-heal the tests.

The problem? Current LLMs either don't support video input at all or, when they do, give us no control over how they process it. That leaves us blind to critical details that affect accuracy.

Building effective agents requires context engineering, and we wanted a better way to fit videos into LLM context windows. That's why we built videostil.

The landscape: Limited options

Gemini is the only major LLM that supports native video ingestion, and it offers some control through configurable FPS and start/end time offsets. However, you still can't see which frames are being processed or control deduplication. It's a black box, which makes debugging and optimization challenging.

OpenAI’s GPT-4o was announced with video understanding capabilities, but here’s the catch: the API doesn’t actually accept video files. You still need to manually extract frames (they recommend 2-4 FPS) and send them as image arrays. You’re doing the preprocessing anyway, but with less control. This has not changed with newer OpenAI models, and the same applies to Anthropic’s models.
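To make the manual path concrete, here is a minimal sketch of what "send them as image arrays" looks like: sampling timestamps at a fixed rate, then packing base64-encoded frames into one Chat Completions user message as `image_url` content parts. It assumes the frames were already extracted and encoded as JPEG; `sampleTimestamps` and `buildFrameMessage` are hypothetical helpers, not part of any SDK, and the code only builds the request payload without calling the API.

```typescript
// Hypothetical helpers: they only build a request payload; no network calls are made.

// One content part in an OpenAI Chat Completions user message.
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface UserMessage {
  role: "user";
  content: ContentPart[];
}

// Timestamps (in seconds) at which to grab a frame when sampling at `fps`.
function sampleTimestamps(durationSec: number, fps: number): number[] {
  // Small epsilon guards against float error such as 0.29 * 100 = 28.999...
  const count = Math.floor(durationSec * fps + 1e-9);
  return Array.from({ length: count }, (_, i) => i / fps);
}

// Packs already-extracted JPEG frames (base64 strings) into a single user
// message, with the instruction text first and the images after it.
function buildFrameMessage(prompt: string, framesBase64: string[]): UserMessage {
  const images = framesBase64.map(
    (b64): ContentPart => ({
      type: "image_url",
      image_url: { url: `data:image/jpeg;base64,${b64}` },
    }),
  );
  return { role: "user", content: [{ type: "text", text: prompt }, ...images] };
}

// Usage: a 10-second clip sampled at 2 FPS means 20 frames to encode and send.
const timestamps = sampleTimestamps(10, 2);
console.log(timestamps.length); // 20
```

Even at the low end of the recommended range, frame counts grow linearly with video length, which is why frame selection matters once the video no longer fits in context.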

Getting started

The simplest way to try videostil is to run it with npx and pass it a video URL. videostil also supports custom parameters such as fps and threshold; see the docs to learn more.

```shell
npx videostil@latest https://example.com/video.mp4
```

videostil also provides an API that can be imported into your Node.js applications. Internally, we have wrapped videostil as a tool that our agents call.

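```typescript
import { extractUniqueFrames } from 'videostil';

// Top-level await requires an ES module context (e.g. "type": "module" in package.json).
const result = await extractUniqueFrames({
  videoUrl: 'https://example.com/video.mp4',
  fps: 25,          // frames sampled per second of video
  threshold: 0.001, // similarity threshold used for deduplication
});

console.log(`Extracted ${result.uniqueFrames.length} unique frames`);
```

How videostil works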

videostil extracts frames from videos and analyzes them using any LLM provider you choose. Built on battle-tested FFmpeg, it gives you control over every step: which frames are extracted, which are kept after deduplication, and what the model sees.
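Frame extraction with FFmpeg usually comes down to a standard pattern: seek with `-ss`, bound the window with `-t`, resample with the `fps` video filter, and write numbered images. The sketch below only builds that argument list. It illustrates the general technique, not videostil's actual command line, and `buildFfmpegArgs` and its option names are hypothetical.

```typescript
// Hypothetical sketch: builds an FFmpeg argument list for frame extraction.
// This is NOT videostil's actual invocation, just the common FFmpeg pattern.

interface ExtractOptions {
  input: string;      // local path or URL FFmpeg can read
  outputDir: string;  // directory for numbered PNG frames
  fps: number;        // frames sampled per second of video
  startTime?: number; // seconds to skip before extracting
  duration?: number;  // seconds of video to process
}

function buildFfmpegArgs(opts: ExtractOptions): string[] {
  const args: string[] = [];
  // -ss placed before -i seeks the input instead of decoding from the start.
  if (opts.startTime !== undefined) args.push("-ss", String(opts.startTime));
  args.push("-i", opts.input);
  // -t placed after -i limits how much video is written from the seek point.
  if (opts.duration !== undefined) args.push("-t", String(opts.duration));
  // The fps filter resamples the stream to the requested frame rate.
  args.push("-vf", `fps=${opts.fps}`);
  // %05d numbers the output images frame_00001.png, frame_00002.png, ...
  args.push(`${opts.outputDir}/frame_%05d.png`);
  return args;
}

// Usage: 10 seconds starting at the 30-second mark, sampled at 25 FPS,
// i.e. roughly 10 * 25 = 250 frames before any deduplication.
const args = buildFfmpegArgs({
  input: "video.mp4",
  outputDir: "frames",
  fps: 25,
  startTime: 30,
  duration: 10,
});
console.log(["ffmpeg", ...args].join(" "));
// ffmpeg -ss 30 -i video.mp4 -t 10 -vf fps=25 frames/frame_%05d.png
```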

Frame extraction and deduplication

- Configurable FPS: Extracts frames at 25 FPS by default, matching the frame rate of Playwright test recordings (Playwright is a popular browser automation framework), but this is fully configurable.

- Selective processing: Specify `startTime` and `duration` to analyze specific portions instead of entire videos.

- Deduplication: Because LLM context windows are limited, videostil deduplicates video frames and exposes a configurable similarity threshold. That threshold is a knob that controls how many unique frames end up in the output.
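Threshold-based deduplication can be illustrated with a simple comparison: measure how different each frame is from the last kept frame, and keep it only when the difference exceeds the threshold. This is a minimal sketch under stated assumptions, not videostil's algorithm. It assumes equal-sized grayscale frames, uses mean absolute pixel difference scaled to [0, 1] as a stand-in metric we chose for illustration, and `dedupeFrames` is a hypothetical name.

```typescript
// Hypothetical sketch of threshold-based frame deduplication.
// Assumes every frame is a grayscale image of the same size (one byte per pixel).

interface GrayFrame {
  index: number;      // position in the extracted sequence
  pixels: Uint8Array; // grayscale values 0-255
}

// Mean absolute difference between two frames, scaled to [0, 1].
function frameDifference(a: Uint8Array, b: Uint8Array): number {
  if (a.length !== b.length) throw new Error("frames must have the same size");
  let total = 0;
  for (let i = 0; i < a.length; i++) total += Math.abs(a[i] - b[i]);
  return total / (a.length * 255);
}

// Keeps the first frame, then any frame that differs from the most recently
// kept frame by more than `threshold`. A higher threshold keeps fewer frames.
function dedupeFrames(frames: GrayFrame[], threshold: number): GrayFrame[] {
  const kept: GrayFrame[] = [];
  for (const frame of frames) {
    const last = kept[kept.length - 1];
    if (last === undefined || frameDifference(last.pixels, frame.pixels) > threshold) {
      kept.push(frame);
    }
  }
  return kept;
}

// Usage: three 4-pixel frames where the second is identical to the first.
const sampleFrames: GrayFrame[] = [
  { index: 0, pixels: new Uint8Array([0, 0, 0, 0]) },
  { index: 1, pixels: new Uint8Array([0, 0, 0, 0]) },
  { index: 2, pixels: new Uint8Array([255, 255, 0, 0]) },
];
console.log(dedupeFrames(sampleFrames, 0.001).map((f) => f.index)); // [ 0, 2 ]
```

Comparing against the last kept frame, rather than the immediately preceding one, means a slow scroll or fade eventually accumulates enough change to cross the threshold instead of being dropped one small step at a time.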

LLM analysis

Extracted frames are then sent to an LLM through our @empiricalrun/llm package. This is our in-house gateway that connects to multiple LLM providers, and you can run it too by bringing your own API keys.
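A gateway that fronts several providers needs a way to fit a long frame list into requests, since providers cap how many images a single request may carry. The following is a minimal sketch assuming a hypothetical `FrameAnalyzer` interface and a caller-chosen `maxImagesPerRequest`. It is not the @empiricalrun/llm API, and the stub analyzer stands in for a real provider call.

```typescript
// Hypothetical provider-agnostic interface; NOT the @empiricalrun/llm API.
interface FrameAnalyzer {
  // Sends one batch of base64-encoded frames plus a prompt; resolves to the model's text.
  analyze(prompt: string, framesBase64: string[]): Promise<string>;
}

// Splits items into consecutive batches of at most `size` elements.
function chunk<T>(items: T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) throw new Error("size must be a positive integer");
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) batches.push(items.slice(i, i + size));
  return batches;
}

// Analyzes frames batch by batch so each request stays under the
// caller-chosen image limit. Results are returned in batch order.
async function analyzeInBatches(
  analyzer: FrameAnalyzer,
  prompt: string,
  framesBase64: string[],
  maxImagesPerRequest: number,
): Promise<string[]> {
  const results: string[] = [];
  for (const batch of chunk(framesBase64, maxImagesPerRequest)) {
    results.push(await analyzer.analyze(prompt, batch));
  }
  return results;
}

// Usage with a stub analyzer that only reports how many frames it received.
const stub: FrameAnalyzer = {
  analyze: async (_prompt, batch) => `saw ${batch.length} frame(s)`,
};
analyzeInBatches(stub, "Describe the user's actions", ["a", "b", "c", "d", "e"], 2)
  .then((out) => console.log(out.join(" | ")));
// saw 2 frame(s) | saw 2 frame(s) | saw 1 frame(s)
```

Batches run sequentially here to keep ordering simple and avoid bursts against provider rate limits; running them concurrently would be faster at the cost of that simplicity.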

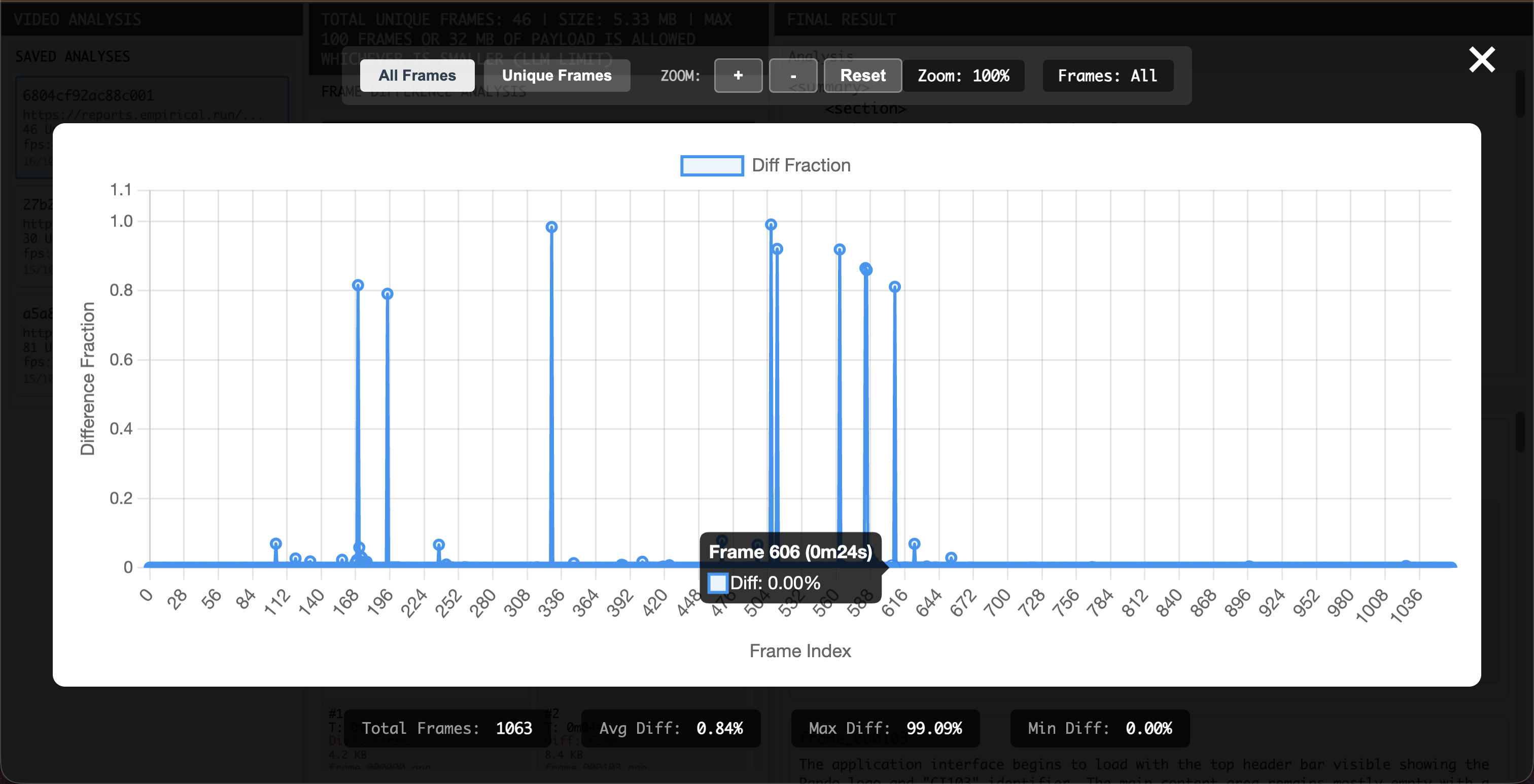

Visual debugging

videostil includes an analysis viewer that shows which frames were extracted and which were dropped as duplicates, alongside the LLM's output.

This visual feedback loop lets you rapidly iterate on your parameters instead of blindly tweaking settings. We designed the UI viewer and npx CLI together for one reason: fast iteration. When you can see what’s happening and adjust quickly, hard problems become tractable.

What’s next

videostil is open source and freshly launched. While we built it for test automation at Empirical, the applications extend far beyond our use case. As LLMs become more integrated into every aspect of work, we believe video understanding needs to be accessible to everyone, controllable and transparent, and optimized for real-world constraints.

We will continue to invest in videostil: optimizing the deduplication algorithms, making it easier to deploy as a service, and improving the analysis viewer. We'd love to involve the wider developer community and shape our roadmap so that videostil works for all of us. If you find this interesting, share your use case or feature requests on GitHub.

Whether you’re converting cooking videos into detailed recipes, extracting step-by-step tutorials from screencasts, analyzing user behavior recordings, generating documentation from demo videos, or exploring entirely new scenarios where you need structured data from video content—we’d love to see what you build.